Dpt. Astronomía Extragaláctica

Instituto Astrofísica Andalucía

Glorieta de la Astronomía s/n

18008 Granada

Spain

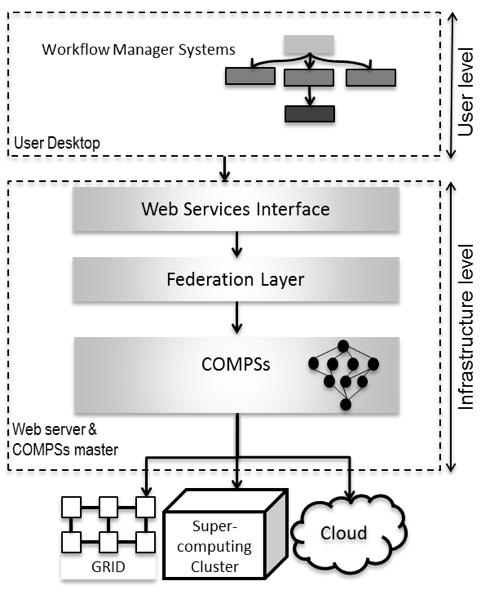

Optimization of the use of Distributed Computing Infrastructures

The exponential increase in the data volume generated by state-of-the-art astrophysical instruments such as ALMA (~1 PB/year), MWA (~3 PB/year) and LOFAR (~6 PB/year), as well as the forthcoming SKA1 (~100 PB/year), is changing the way astronomers access and share data. Astronomers increasingly rely on Distributed Computing Infrastructures (DCIs), such as Grid or Cloud, to access and analyse the generated data. AMIGA aims to contribute to an efficient exploitation of these DCIs. To this end, some of its developments explore ways of optimizing the use of the resources of these infrastructures by lowering the technical barriers that their use implies.

Web services as Building Blocks for Science Gateways in Astrophysics

As part of our efforts to provide astronomers with advanced tools that facilitate the efficient exploitation of DCIs, we carried out the work published in Sánchez-Expósito et al. (2017). In this work, we adopted an approach used in the Life Sciences domain and applied it to the Astrophysics domain, using as an example a set of analysis tasks of interest for some SKA use cases that aim to perform kinematical studies of galaxies. In this approach, computing and scientific applications are available as-a-Service and are empowered by COMPSs, the programming model developed by the Barcelona Supercomputing Center (BSC), which enables an efficient exploitation of the computing resources. COMPSs orchestrates the tasks involved in the service as a workflow, submitting them as concurrently as possible to DCIs. The COMPSs workflows are exposed as web services that scientists can combine through graphical workflow management systems such as the Taverna Workbench.

The resulting method is presented as a two-level workflow system: at the user level, workflows are built upon web services, while at the infrastructure level those services are themselves workflows.

Two-Level Workflow System Architecture

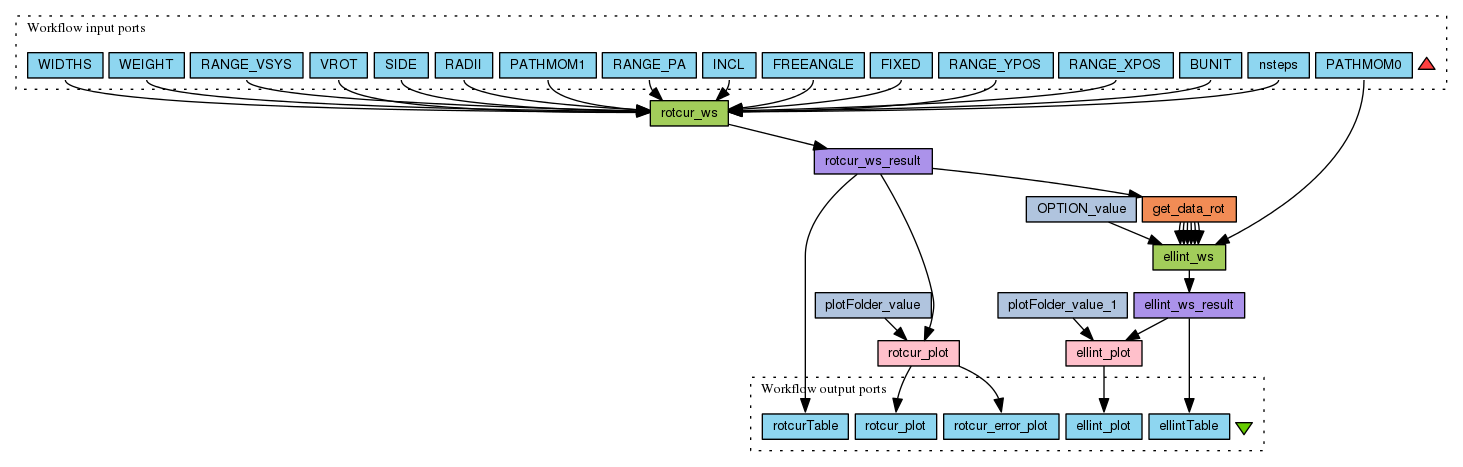

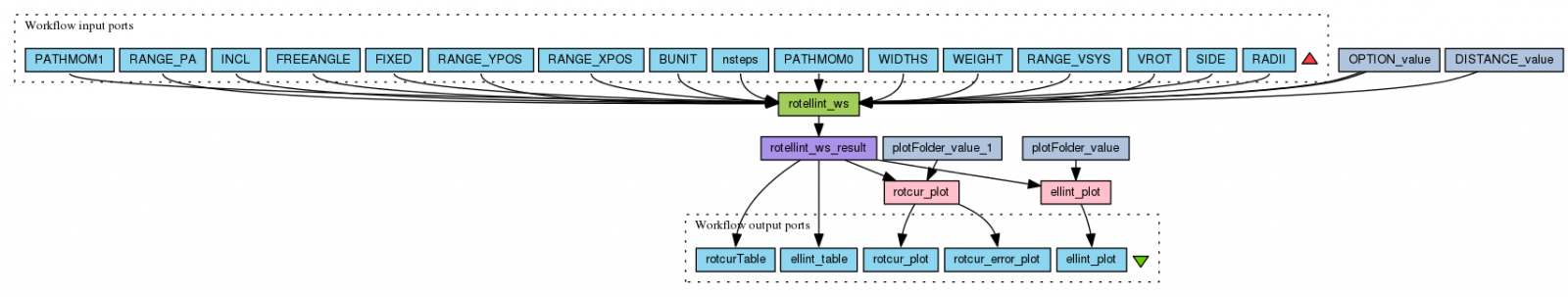
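The two-level idea described above can be illustrated with a minimal Python sketch. It does not use COMPSs or Taverna: the standard-library thread pool only stands in for an orchestrator that submits independent tasks concurrently, and the names `run_task`, `service`, `user_workflow`, `step_a` and `step_b` are hypothetical placeholders.

```python
from concurrent.futures import ThreadPoolExecutor


def run_task(name, value):
    """Stand-in for one infrastructure-level task (hypothetical)."""
    return f"{name}({value})"


def service(name, inputs, max_workers=4):
    """Infrastructure level: a 'service' that is itself a small workflow.

    Independent tasks are submitted concurrently to a thread pool, which
    here only mimics an orchestrator; results come back in input order.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda v: run_task(name, v), inputs))


def user_workflow(inputs):
    """User level: a workflow built by chaining two services."""
    first = service("step_a", inputs)
    return service("step_b", first)


if __name__ == "__main__":
    print(user_workflow([1, 2, 3]))
```

The user-level function only sees services; the concurrency is hidden inside each service, which is the separation the two-level design aims for.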

Science Use Case

The experiment implemented in this paper is based on the Groningen Image Processing System (GIPSY). In particular, it involves three GIPSY tasks: ROTCUR, ELLINT and GALMOD. ROTCUR derives the kinematical parameters of a galaxy, including the rotation curve; ELLINT generates the radial profile of the galaxy emission, taking as input the results provided by ROTCUR; GALMOD builds a model cube from the kinematical and geometrical parameters produced by ROTCUR and the emission profile produced by ELLINT. Users provide a set of input data, some of them compiled from external catalogues or derived by visual inspection of the data. It is common practice to test different values of these inputs by sweeping a small range, so as to generate several models. The astronomer then assesses which model best fits the original data.

Examples of the implemented services and workflows for supporting the kinematical modelling of galaxies

To implement these services, GIPSY was first ported to different DCIs.
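The parameter sweep mentioned in the science use case can be sketched as follows. This is only an illustration under stated assumptions: it does not call GIPSY, the rotation-curve formula in `toy_model` is a hypothetical stand-in for a real model, and a sum of squared residuals replaces the astronomer's own assessment of which model fits best.

```python
def toy_model(radii, v_max, r_scale=2.0):
    """Hypothetical rotation curve: v(r) = v_max * r / (r + r_scale)."""
    return [v_max * r / (r + r_scale) for r in radii]


def residual(observed, model):
    """Sum of squared differences between data and model."""
    return sum((o - m) ** 2 for o, m in zip(observed, model))


def sweep(radii, observed, candidates):
    """Build one model per candidate value; return (best_value, scores)."""
    scores = {v: residual(observed, toy_model(radii, v)) for v in candidates}
    best = min(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    radii = [1.0, 2.0, 4.0, 8.0]
    observed = toy_model(radii, 150.0)  # synthetic "data" for the demo
    best, scores = sweep(radii, observed, [130.0, 140.0, 150.0, 160.0])
    print(best, scores[best])
```

Each candidate value yields an independent model, so in a real deployment the models in a sweep are natural candidates for concurrent execution.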

Taverna workflow rotcur_ellint_wf. This workflow calls the rotcur_ws service, which internally executes the task ROTCUR. It then obtains the kinematical parameters of the galaxy by means of a Python script (get_data_rot) that processes the rotcur_ws results. These kinematical parameters are passed to the ellint_ws service, which executes the task ELLINT. Finally, two nested workflows (rotcur_plot and ellint_plot) plot the rotcur_ws and ellint_ws results.

Taverna workflow rotellint_wf. This workflow calls the rotellint_ws service, which internally executes the task ROTCUR, processes its output data by calculating the average of the kinematical parameters of the central rings of the galaxy, and passes the resulting values to the task ELLINT as input data. Finally, two nested workflows (rotcur_plot and ellint_plot) plot the rotellint_ws results.
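The intermediate step of rotellint_ws, averaging the kinematical parameters over the central rings before handing them to ELLINT, might look roughly like the sketch below. The ring record layout (`radius`, `vsys`, `pa`, `incl`), the choice of three central rings, and the function name `average_central_rings` are assumptions made for illustration; the actual service may select rings and parameters differently.

```python
from statistics import mean


def average_central_rings(rings, n_central=3, keys=("vsys", "pa", "incl")):
    """Average the selected parameters over the innermost rings.

    `rings` is a list of dicts, one per ring, each holding a 'radius'
    plus the parameters named in `keys` (hypothetical layout).
    """
    central = sorted(rings, key=lambda r: r["radius"])[:n_central]
    if not central:
        raise ValueError("no rings given")
    return {k: mean(r[k] for r in central) for k in keys}


if __name__ == "__main__":
    # Made-up ring values, only to exercise the function.
    rings = [
        {"radius": 10.0, "vsys": 1000.0, "pa": 40.0, "incl": 60.0},
        {"radius": 20.0, "vsys": 1002.0, "pa": 42.0, "incl": 60.0},
        {"radius": 30.0, "vsys": 1004.0, "pa": 44.0, "incl": 60.0},
        {"radius": 40.0, "vsys": 1100.0, "pa": 80.0, "incl": 20.0},
    ]
    print(average_central_rings(rings))
```

Restricting the average to the inner rings keeps the values passed downstream from being skewed by the outer rings, whose fitted parameters are excluded here by construction.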